AI at scale: Engineering trust for federal impact

Many federal AI pilots stall because they’re treated as innovation theater: exciting demos with little operational rigor. But in high-stakes environments like federal health, national security, and regulatory oversight, agencies need more than vision. They need AI they can trust.

Trust is engineered, not assumed

Trust in AI isn’t a soft concept. It’s built through architecture, governance, and transparency. That’s not just our perspective: it’s echoed in an April OMB memo, which describes governance as “an enabler of effective and safe innovation.”

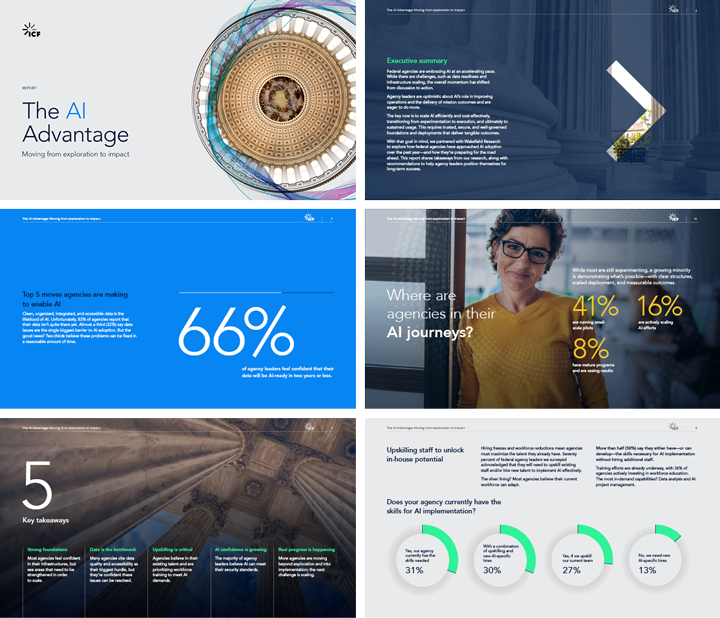

Our latest AI research shows that nearly half of federal agencies are running AI pilots, but only 8% have scaled them into full production.

In traditional enterprise software, we expect audit trails, version control, and explainability. We should expect the same from AI. There must be a way to validate results and mitigate risk, especially with large language models (LLMs), whose outputs can be inaccurate and difficult to explain.

Some leaders worry that this kind of rigor will slow innovation, but the opposite is true. Moving fast without governance is a false economy. It leads to rework, reputational risk, and stalled adoption. Rigor isn’t a brake on AI: it’s the foundation that allows agencies to scale responsibly.

3 ways to build trust and scale AI responsibly

Whether it’s a policy reference, data source, or content origin, agencies need to know where AI-generated insights come from. This knowledge builds confidence, supports validation, and reinforces accountability, and acquiring it doesn’t have to be cumbersome.

1. Embed data lineage and explainability.

Data lineage maps show how data flows through systems, while explainability shows why an AI model made a specific choice. Together, they give leaders the confidence to act on AI-driven insights in regulated or high-impact environments. Recently, we designed a cloud-enabled data platform for a federal client that embedded data lineage as a core architectural feature, using metadata, tagging, and governance tools to track lineage and source attribution. This enabled the agency to validate insights, reduce compliance risk, and confidently scale analytics across teams.
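To make the lineage idea concrete, here is a minimal Python sketch, not the platform described above. It assumes each dataset registers a record naming its upstream parents and any governance tags. The names `LineageRecord`, `LineageGraph`, `original_sources`, and `inherited_tags`, along with the sample dataset IDs, are hypothetical. Walking the parent links answers the question leaders actually ask: which original sources does this report depend on, and what handling rules does it inherit?

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineageRecord:
    """One step in a dataset's history (hypothetical schema, for illustration)."""
    dataset_id: str
    operation: str                      # e.g. "ingest", "clean", "join"
    parents: tuple[str, ...] = ()       # upstream dataset IDs; empty for raw sources
    tags: frozenset[str] = frozenset()  # governance metadata, e.g. "sensitive"


class LineageGraph:
    """In-memory lineage store; a real platform would persist this metadata."""

    def __init__(self) -> None:
        self._records: dict[str, LineageRecord] = {}

    def register(self, record: LineageRecord) -> None:
        # Refuse records whose parents are unknown, so every edge stays traceable.
        missing = [p for p in record.parents if p not in self._records]
        if missing:
            raise ValueError(f"unregistered parents: {missing}")
        self._records[record.dataset_id] = record

    def _upstream(self, dataset_id: str) -> list[LineageRecord]:
        """Return the dataset's own record plus every ancestor, each once."""
        seen: set[str] = set()
        stack = [dataset_id]
        found: list[LineageRecord] = []
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            record = self._records[current]
            found.append(record)
            stack.extend(record.parents)
        return found

    def original_sources(self, dataset_id: str) -> set[str]:
        """Raw datasets (no parents) that this dataset ultimately derives from."""
        return {r.dataset_id for r in self._upstream(dataset_id) if not r.parents}

    def inherited_tags(self, dataset_id: str) -> set[str]:
        """Union of governance tags on the dataset and everything upstream of it."""
        tags: set[str] = set()
        for record in self._upstream(dataset_id):
            tags |= record.tags
        return tags


# Usage with placeholder dataset IDs:
graph = LineageGraph()
graph.register(LineageRecord("claims_raw", "ingest", tags=frozenset({"sensitive"})))
graph.register(LineageRecord("policy_docs", "ingest"))
graph.register(LineageRecord("claims_clean", "clean", ("claims_raw",)))
graph.register(LineageRecord("risk_report", "join", ("claims_clean", "policy_docs")))
print(sorted(graph.original_sources("risk_report")))  # ['claims_raw', 'policy_docs']
print(sorted(graph.inherited_tags("risk_report")))    # ['sensitive']
```

Propagating tags downstream is a deliberately conservative choice: a derived report inherits every restriction of its inputs unless a governance step explicitly clears it, which this sketch does not model.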

2. Validate outputs with human oversight.

In sensitive areas like health and policy, AI can’t operate on autopilot. Its outputs must be reviewed by people who understand the mission. When we partnered with a federal health agency, we built a generative AI assistant trained on approved content and designed for reuse across teams. Human validation kept outputs aligned with policy and context, while source citations ensured that every recommendation could be traced back to its origin. This made the solution both scalable and trustworthy.
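A review gate like this can be sketched in a few lines. The example below is an illustrative assumption, not the assistant we built: `Draft`, `check_citations`, `review`, and `release` are hypothetical names, and the source IDs are placeholders. It shows two checks working together: an automated test that every citation points to an approved source, and a recorded human decision that must happen before anything is released.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    """A generated answer awaiting human review (hypothetical structure)."""
    text: str
    citations: list[str]  # IDs of the source documents the answer relies on
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None
    notes: str = ""


def check_citations(draft: Draft, approved_sources: set[str]) -> list[str]:
    """Return problems that should block approval; an empty list means none found."""
    problems = []
    if not draft.citations:
        problems.append("no citations: answer cannot be traced to a source")
    unknown = [c for c in draft.citations if c not in approved_sources]
    if unknown:
        problems.append(f"cites unapproved sources: {unknown}")
    return problems


def review(draft: Draft, reviewer: str, approve: bool,
           approved_sources: set[str], notes: str = "") -> Draft:
    """Record a human decision; approval is refused if citations fail the check."""
    if draft.status is not Status.PENDING_REVIEW:
        raise ValueError("draft has already been reviewed")
    if approve:
        problems = check_citations(draft, approved_sources)
        if problems:
            raise ValueError(f"cannot approve: {problems}")
    draft.reviewer = reviewer
    draft.notes = notes
    draft.status = Status.APPROVED if approve else Status.REJECTED
    return draft


def release(draft: Draft) -> str:
    """Only human-approved drafts leave the system, always with their sources."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("only human-approved drafts can be released")
    return f"{draft.text}\n\nSources: {', '.join(draft.citations)}"


# Usage with placeholder source IDs:
approved = {"policy-manual-ch3", "program-faq"}
draft = Draft("Example answer text.", ["policy-manual-ch3"])
review(draft, "reviewer-1", approve=True, approved_sources=approved)
print(release(draft))
```

Keeping the reviewer's identity and notes on the draft itself means the approval becomes part of the audit trail rather than living in a separate email thread.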

3. Treat AI as a core system, not a side project.

Scaling requires integration into existing workflows so AI delivers real value without disrupting mission-critical operations. When AI is treated as infrastructure, it earns the oversight, investment, and trust needed to move from pilot to platform. That includes building in mechanisms for source traceability so that every insight, prediction, or recommendation can be audited, verified, and defended.

From risk management to impact

This shift isn’t just about reducing risk. It’s about unlocking impact. Agencies that invest in trust scale faster, deliver smarter, and earn stakeholder confidence.

We’ve seen this firsthand with ICF Fathom, a platform we built to put these trust principles into practice. By embedding lineage, explainability, and human oversight throughout the AI lifecycle, Fathom helps agencies move confidently from pilot to production, unlocking real mission impact without compromising security or accountability.

Final thought

AI won’t scale on vision alone. It scales on trust, built through lineage, governance, and disciplined integration. If your agency is ready to go from exploration to impact, the time to start is now.