How traditional modernization holds back federal AI—and what to do

Artificial intelligence is reshaping how federal agencies deliver on their missions, offering new pathways to efficiency, insight, and service delivery. However, AI’s rapid evolution poses a challenge to traditional digital modernization methods, especially those that depend on fully defining system requirements and specifications upfront.

For decades, agencies have relied on detailed requirements to manage risk in a relatively stable technology environment. That approach makes sense when system needs are well understood and technologies are mature. But AI doesn’t operate on those terms. When the technology shifts rapidly, the greater risk lies in locking into a rigid set of requirements that cannot adapt as the landscape changes.

The result? Agencies may end up with solutions based on outdated algorithms or incomplete datasets—spending months and millions only to miss the mark.

To capitalize on AI’s potential while maintaining appropriate oversight, agencies should adopt a dual-track approach: first, enabling controlled experimentation through AI incubators; and second, scaling proven capabilities through outcomes-based contracting.

Creating AI solutions in context

Agencies can accelerate their learning curve and reduce risk by testing AI applications within a secure environment before committing significant resources. Standing up an AI incubator, whether as a virtual sandbox or a dedicated pilot environment, allows mission leaders and developers to safely test models, tools, and workflows on agency-specific use cases and validate the potential of AI solutions.

Within such an incubator, AI can be applied to a range of mission challenges: automating manual data reconciliation, improving fraud detection, optimizing resource allocation, or accelerating case processing. The intent is to identify what actually works in the agency’s operational context—not just what looks promising in theory.

Agencies can support these incubators under flexible acquisition mechanisms such as time-and-materials task orders, firm-fixed-price prototype deliverables, or innovation-friendly vehicles like Other Transaction Authority (OTA) agreements or Commercial Solutions Openings (CSOs), where applicable. These arrangements allow vendors to iterate quickly and demonstrate tangible outcomes without the burden of premature full-scale requirements development.

This model shifts the question from 'What can AI do?' to 'What can AI deliver for us?' and helps program leaders make data-informed investment decisions while minimizing sunk costs and compliance risk.

Scaling smarter through outcomes-based contracting

Once promising prototypes emerge from the incubator, agencies can use outcomes-based contracts to scale them responsibly. Unlike traditional statements of work that prescribe how contractors must perform, outcomes-based approaches specify what success looks like in measurable terms tied to mission performance.

For example:

- Reducing benefit claims processing times by 25%.

- Detecting and preventing at least $10 million in fraudulent payments.

- Increasing data ingestion accuracy for analytics systems to 98%.

Such contracts align contractor incentives directly with mission outcomes rather than technical deliverables. They also encourage vendors to leverage emerging innovation, including AI techniques or tools that did not exist when the contract was awarded, so long as the agreed-upon results are achieved.

To manage performance risk, agencies can employ milestone-based payment structures, performance incentives, or phased task orders linked to quantitative results. This maintains accountability while preserving flexibility.
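As a rough illustration of linking a milestone payment to quantitative results, the sketch below checks measured outcomes against the three example targets above and releases an equal share of a milestone's value for each target met. Everything here is hypothetical: the class and function names, the equal-share payment rule, the milestone value, and the measured figures are assumptions made for illustration, not terms from any actual contract or acquisition regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeTarget:
    """One measurable outcome written into a hypothetical contract."""
    name: str
    target: float
    higher_is_better: bool = True  # set False for metrics such as days-to-decision

    def is_met(self, measured: float) -> bool:
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


def milestone_payment(targets, measured, milestone_value):
    """Assumed rule: each target met earns an equal share of the milestone value."""
    met = [t.name for t in targets if t.is_met(measured[t.name])]
    share = milestone_value / len(targets)
    return met, share * len(met)


# Targets mirror the three example outcomes listed above.
TARGETS = [
    OutcomeTarget("claims_time_reduction_pct", 25.0),
    OutcomeTarget("fraud_prevented_usd", 10_000_000.0),
    OutcomeTarget("ingestion_accuracy_pct", 98.0),
]

# Made-up measurements for one review period (illustrative only).
measured = {
    "claims_time_reduction_pct": 27.5,
    "fraud_prevented_usd": 8_200_000.0,
    "ingestion_accuracy_pct": 98.4,
}

met, payment = milestone_payment(TARGETS, measured, milestone_value=300_000.0)
print(met, payment)
```

With these made-up numbers, two of the three targets are met, so two-thirds of the milestone value is released. A real contract would more likely weight outcomes unequally or add incentive tiers; the equal split is only the simplest rule to show.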

Importantly, the lessons from the incubator stage make this approach more viable: both the agency and the contractor enter the outcomes-based phase with shared data, validated use cases, and realistic expectations of what AI can accomplish in context.

Innovation that delivers results

AI presents immense opportunity but also exposes the limits of 'business-as-usual' modernization. Agencies that continue to rely exclusively on rigid, prescriptive acquisition and development processes risk falling behind, spending time and resources on systems that can’t evolve as quickly as their mission demands.

By pairing AI incubation with outcomes-based contracting, agencies can manage risk while fostering a culture of responsible innovation. This approach enables them to procure not just tools or code, but verified mission outcomes and solutions that are adaptable, accountable, and aligned with long-term public value.

As each successful AI implementation informs the next, agencies can create a self-reinforcing innovation cycle in which lessons learned, validated tools, and mission insights drive future modernization at scale.

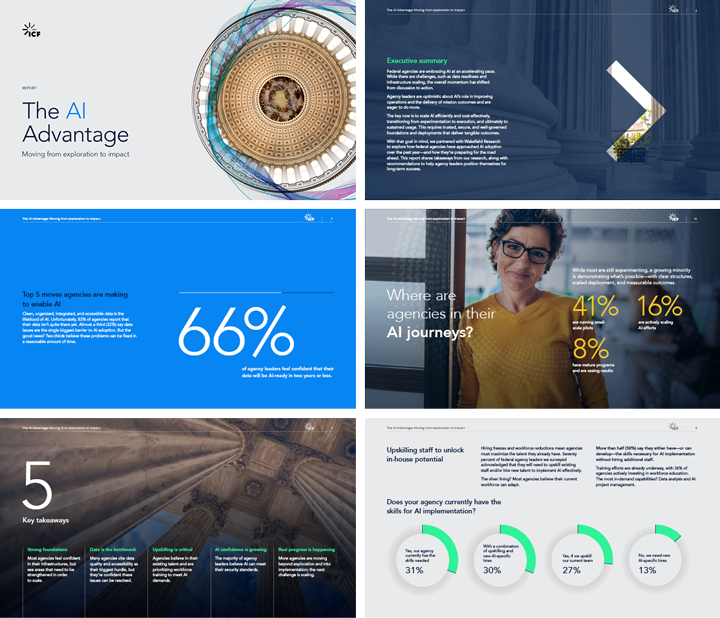

Accelerate your AI advantage

ICF Fathom helps federal agencies accelerate responsible AI adoption through a structured approach that blends strategy, prototyping, and implementation. Drawing on ICF's experience delivering solutions under a variety of contractual models, from agile task orders to flexible innovation authorities, Fathom allows agencies to explore, test, and scale AI securely and effectively.

To learn more, visit icf.com/fathom or contact the ICF team.