Policy and regulatory

Our data-driven approach to regulatory analysis and policy powers a full spectrum of public services.

We combine our data analytics expertise with unmatched experience navigating the policy development process to help our clients solve some of the world’s most pressing public policy issues.

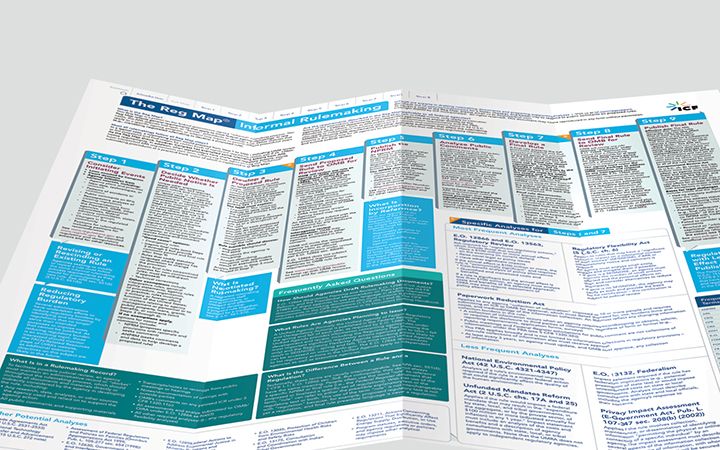

Navigate the regulatory process and avoid common pitfalls

As the leading provider of policy and regulatory development support to government agencies, we use the best available science and data to help agencies and policymakers in the United States, United Kingdom, and European Union develop sound public policy and implement effective regulatory programs.

Government policy development is, by design, highly technical and multidisciplinary, and successful implementation requires a broad range of expertise. Our economists, policy analysts, biological and physical scientists, lawyers, and data scientists work with our industry experts to provide the full suite of services agencies need to develop, implement, and evaluate policy and to improve its outcomes.

Our solutions

Survey research

ICF offers comprehensive survey research services to help agencies understand the industries they regulate and to inform regulatory analyses.
